Part 2: Preparing for a Regulated AI World

Artificial Intelligence (AI) today touches nearly every aspect of human life and has sparked a fierce debate over the good and the harm it can do. Whichever side of that debate one lands on, one thing is abundantly clear: AI is here to stay. And while the potential of AI for good is immense, we would be remiss to ignore the very real concerns around its misuse.

Regulators around the world have been debating the need to regulate Big Tech for years. That discourse has now reached fever pitch, with recent developments in Large Language Models (LLMs) widely seen as a significant step towards Artificial General Intelligence (AGI).

This document will explore the motivations behind regulating AI, the global regulatory landscape, key pillars around which regulations are being built, recommendations for companies, and important milestones to watch.

Key Concerns & Need for Regulating AI

“Garbage in, garbage out”, or so goes the oft-cited adage drilled into budding data scientists from day one. AI systems are, at their core, a product of the data they are trained on and the way that data is modelled. Modern advances in AI such as LLMs need vast amounts of high-quality data, and that data is not always ethically accessible.

Data also often reflects the biases of its source. Any inherent bias in the training data, or any flaw in the algorithms, can be greatly amplified once AI is embedded in our daily lives, with significant consequences for large populations. And as AI becomes an integral part of critical decision-making processes, it is poised to affect our lives and livelihoods at a previously unimaginable scale.

Naturally, concerns around fairness, safety and accountability have been at the forefront of any AI-related conversation.

To address these concerns, governments and regulatory bodies around the world have started developing frameworks and regulations to govern the development, deployment, and use of AI systems.

Foundational Principles of AI Regulation

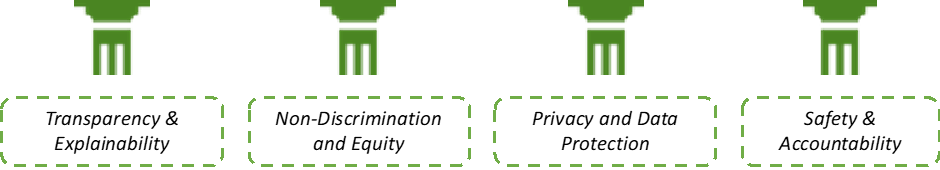

Most of these frameworks are being built around four pillars:

- Transparency & Explainability – Regulations aim to promote transparency in AI systems, ensuring that AI-driven decisions are not a “black box”, i.e. that users understand how and why AI contributes to outcomes that impact them. Explanations should ideally be provided in clear and accessible language, so that anyone affected can understand the logic underlying the AI system’s decision.

- Non-Discrimination and Equity – Regulations aim to prevent algorithmic discrimination and ensure equitable design and use of AI systems. Proactive steps during the AI development process, such as diverse community consultations, robust testing, risk identification, and mitigation, are critical to ensure a fair and unbiased AI system.

- Privacy and Data Protection – Regulations aim to protect individuals' and organisations' privacy rights and to establish guidelines for ethical data usage by AI systems. In an increasingly data-hungry landscape, it is critical to have guardrails around data ownership and access mechanisms.

- Safety & Accountability – Regulations aim to ensure the safety of those impacted by AI systems by holding AI system owners accountable and liable for adverse outcomes directly attributable to those systems.

Global Regulatory Framework and Its Evolution

Regulation usually trails innovation, and regulating a field that evolves as rapidly as AI is itself a complex and evolving process. Various countries are actively developing frameworks to address the unique challenges posed by AI technology, and global organizations such as the United Nations and the OECD are developing their own approaches. The current status of AI regulation across key jurisdictions is summarized below:

- United States – Both federal and state governments are engaged in regulatory efforts, and at present there seems to be little coordination between them.

At the federal level, proposed bills such as the “Algorithmic Accountability Act” and the “DEEP FAKES Accountability Act” aim to establish guidelines for AI system accountability. Meanwhile, the National Institute of Standards and Technology (NIST) has published the voluntary “AI Risk Management Framework”.

At the state level, Colorado has taken the lead by introducing its “Algorithm and Predictive Model Governance Regulation”.

While these efforts focus on specific aspects of AI regulation and governance, the White House published the broader “Blueprint for an AI Bill of Rights” in October 2022, with input from academics, human rights groups, the general public and even large companies like Microsoft and Google. This document is non-binding guidance rather than law, and outlines the current administration’s overarching objectives and framework for AI regulation.

- European Union – The EU has been a global leader in data protection law and continues to lead on AI regulation with the “Artificial Intelligence Act”, which is under development and expected to pass soon. This comprehensive act aims to set new standards for AI oversight in a bid to create what it refers to as “trustworthy AI.”

The AI Act also includes outright bans on automated systems that the EU deems to pose an unacceptable risk, such as biometric surveillance, emotion recognition and predictive policing.

The EU's approach is centered around transparency, accountability, and human rights protection in AI development and deployment.

- India – India has taken a distinct stance on AI regulation: the government has said it does not plan to regulate the growth of AI and is focusing on safety instead. To that end, it has proposed the draft Digital Personal Data Protection (DPDP) Bill 2023, which aims to regulate the safety aspects of AI, in particular how personal data is handled.

While still in its nascent stages, AI regulation in India is expected to pick up pace rapidly over the next few months.

A Founder’s Guide to Preparing for a Regulated AI Landscape

Like it or not, AI regulations are here to stay and will likely see plenty of evolution before stabilizing into a well-defined and well-understood set of laws. As partners to founders building the AI systems of tomorrow, we believe it is imperative for companies to lay the foundations today that will enable them to operate in a regulated AI landscape. We recommend the following:

- Explainable systems – Build your models and architecture in a way that lets you explain to users how the given system works, the role of automation, why the system arrived at the decision it did, and who is responsible for the decisions it makes.

- Test and correct for biases – Test your data and models for biases at all stages of AI development and monitor the system post deployment.

- Ensure ethical data practices – Comply with existing data privacy laws as a starting point, but beyond that, ensure that safeguards and consent mechanisms are built into the way you acquire and use data. You are also responsible for safeguarding sensitive information in your possession, such as personally identifiable information (PII).

- Human-in-the-Loop fallbacks – If someone would rather opt out of an automated system in favor of a human alternative, they should be able to do so, especially when such a fallback helps ensure accessibility and protection from particularly harmful impacts.

- Stay informed – Keep track of the evolving regulatory landscape in different jurisdictions and understand how it impacts your industry and AI applications.

- Engage with stakeholders – Collaborate with relevant communities and regulators to incorporate diverse perspectives and address concerns related to non-discrimination, privacy, and ethical use of AI.
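To make the "test and correct for biases" recommendation a little more concrete, here is a minimal sketch of one common check: comparing how often a model gives a positive outcome to each group. The function names, the toy data and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment contexts) are illustrative assumptions on our part, not a legal standard or a requirement of any regulation discussed above.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive outcomes, so rates are equal
    return min(rates.values()) / highest


# Hypothetical toy data: a group label and a model decision (1 = approved).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, not a legal standard

print(rates, round(ratio, 2), needs_review)
```

A check like this is only a starting point. It can run on training data before a model is built and on live decisions after deployment, but a low ratio is a prompt for human investigation rather than proof of discrimination, and a high ratio does not rule out other kinds of bias.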

TL;DR

AI regulations are rapidly evolving worldwide, driven by concerns about bias, fairness, privacy, and accountability. Governments and regulatory bodies are establishing frameworks to ensure responsible and ethical AI practices. Companies must adapt to the evolving regulatory landscape, comply with existing regulations, and implement responsible AI practices to mitigate risks and capitalize on the transformative potential of AI. By embracing ethical and accountable AI practices, companies can build trust, enhance their competitiveness, and navigate the complex regulatory environment.

Further Reading

- https://hbr.org/2021/09/ai-regulation-is-coming

- https://www.techtarget.com/searchenterpriseai/feature/AI-regulation-What-businesses-need-to-know

- https://techcrunch.com/2023/04/05/india-opts-against-ai-regulation/

- https://www.techlusive.in/artificial-intelligence/mos-it-says-govt-wont-regulate-applications-of-ai-in-india-1389083/

- https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

- https://www.reuters.com/technology/un-security-council-hold-first-talks-ai-risks-2023-07-16/
